var db = Database.OpenNamedConnection("dbConnection");

var list = new List<User>();

for (var i = 0; i < 10; i++)

list.Add(new User {

Id = i + 10000, Username = "User" + i,

Password = "Pwd" + i,

DateCreated = DateTime.Now.AddDays(-i),

RoleId = (i % 3)

}

);

//All users are inserted into the database

// with this single call

db.User.Insert(list);

When bulk inserting into SQL Server Simple.Data makes a call to the ADO.NET DbCommand.Prepare() which actually creates a compiled version of the insert statement on the server itself . Then insert statements are then run one by one which should improve performance. However you may not see and performance gain if you are only bulk inserting 2 or 3 rows as there is a small upfront overhead. This compiled temporary stored procedure will be destroyed when the current connection is closed. The SQL profiler shows us:- declare @p1 int

set @p1=-1

exec sp_prepexec @p1 output,N'

@p0 int,

@p1 varchar(50),

@p2 varchar(50),

@p3 datetime,@p4 int',

N'insert into [dbo].[User] ([Id],[Username],[Password],

[DateCreated],[RoleId]) values (@p0,@p1,@p2,@p3,@p4)',

@p0=10000,@p1='User0',@p2='Pwd0',

@p3='Nov 29 2011 8:59:44:897PM',@p4=0

select @p1

and then sends each insert as:- exec sp_execute 1,@p0=10001,@p1='User1',@p2='Pwd1', @p3='Nov 28 2011 8:59:44:897PM',@p4=1 exec sp_execute 1,@p0=10002,@p1='User2',@p2='Pwd2', @p3='Nov 27 2011 8:59:44:897PM',@p4=2 exec sp_execute 1,@p0=10003,@p1='User3',@p2='Pwd3', @p3='Nov 26 2011 8:59:44:897PM',@p4=0Note: In the case of Insert you get back a new list of objects with any database-assigned default values such as identity values or timestamps.

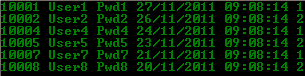

The following code is just a check to see that the inserts worked:-

foreach (var item in db.User.All())

Console.WriteLine(string.Concat(

item.Id, " ",

item.Username, " ",

item.Password, " ",

item.DateCreated, " ",

item.RoleId)

);

I believe before version 1 release Mark is going to create an Upsert which will either update or insert your entity based on whether the record exists in the database.

Since writng this post, Mark has also done some macro optimisations using Bulk insert which makes use of SqlBulkCopy. Read this blog post to find out more.